Machine learning models are effective tools for making predictions, but some stand out for their accuracy and reliability. Gradient Boosting and its optimized implementation, XGBoost, are among the most widely used of these techniques. To understand these ideas fully and advance your career, enroll in the Data Science Courses in Bangalore at FITA Academy and gain practical experience with current machine learning methods.

What is Gradient Boosting?

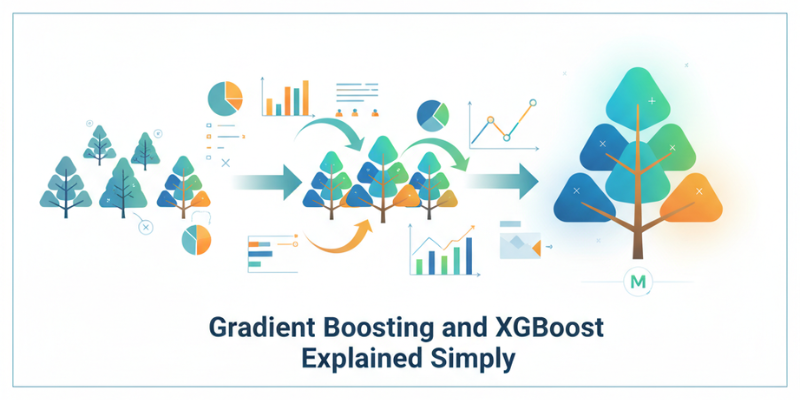

Gradient Boosting is an ensemble learning method: it combines many weak models into one strong predictive model. In most cases, the weak models are shallow decision trees. Each tree tries to correct the errors made by the trees before it, so overall performance improves gradually.

Imagine you are trying to guess someone’s age. Your first guess might be far off, but each time you get feedback, you adjust your guess closer to the right answer. Gradient Boosting works in a similar way. It builds one model at a time, learns from its mistakes, and keeps refining until it reaches better accuracy.

How Gradient Boosting Works

The process begins with a simple model that makes an initial prediction; for regression, this is often just the average of the target values. The algorithm then computes the errors, also known as residuals (the differences between the actual and predicted values), and fits another model to those residuals. This repeats for many iterations, with each new model's output added to the running prediction to correct what came before. To learn this powerful technique in detail, consider joining a Data Science Course in Hyderabad and take your skills to the next level.
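The loop described above can be sketched in plain Python. This is a minimal illustration, not how any production library implements it: it assumes a single numeric feature, squared-error loss, and one-split "stumps" as the weak models. The function names (`fit_stump`, `fit_boosting`, and so on) and the toy data are made up for this example.

```python
def fit_stump(xs, targets):
    """Find the single split on x that best fits the targets (least squares)."""
    best = None
    distinct = sorted(set(xs))
    # Candidate thresholds are midpoints between consecutive distinct x values.
    for lo, hi in zip(distinct, distinct[1:]):
        threshold = (lo + hi) / 2
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_value = sum(left) / len(left)
        right_value = sum(right) / len(right)
        error = (sum((t - left_value) ** 2 for t in left)
                 + sum((t - right_value) ** 2 for t in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_value, right_value)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def fit_boosting(xs, ys, n_rounds=50, learning_rate=0.1):
    base = sum(ys) / len(ys)          # initial prediction: the mean target
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # Residuals are what the current ensemble still gets wrong.
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Add a small, scaled correction to the running prediction.
        predictions = [p + learning_rate * predict_stump(stump, x)
                       for p, x in zip(predictions, xs)]
    return base, learning_rate, stumps


def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


def mse(model, xs, ys):
    return sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(ys)


# Toy data, invented for illustration only.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [5.0, 7.0, 9.0, 11.0, 20.0, 22.0, 24.0, 26.0]

baseline = fit_boosting(xs, ys, n_rounds=0)   # initial prediction only
model = fit_boosting(xs, ys, n_rounds=50)
print("MSE with the initial prediction only:", mse(baseline, xs, ys))
print("MSE after 50 boosting rounds:", mse(model, xs, ys))
```

The `learning_rate` factor shrinks each correction, so no single weak model dominates the result. Smaller values usually need more rounds but tend to generalize better.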

Over time, the combined result of all these small models becomes a highly accurate predictor. The term “gradient” comes from gradient descent, an optimization method for minimizing a loss function. Each new model is fit to the negative gradient of the loss with respect to the current predictions, so adding it moves the ensemble one step in the direction that reduces the loss. For squared-error loss, that negative gradient is exactly the residual.
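To make the link between residuals and gradients concrete, here is a short worked derivation for squared-error loss. The symbols are assumptions introduced for this sketch: $F_{m-1}$ is the ensemble after $m-1$ rounds, $h_m$ is the new weak model, and $\nu$ is a learning rate between 0 and 1.

```latex
% Squared-error loss for one example with target y and current prediction F(x):
L\bigl(y, F(x)\bigr) = \tfrac{1}{2}\bigl(y - F(x)\bigr)^2

% Its negative gradient with respect to the prediction is the residual:
-\frac{\partial L}{\partial F(x)} = y - F(x)

% Each round fits h_m to these values and takes a small step:
F_m(x) = F_{m-1}(x) + \nu \, h_m(x)
```

With other loss functions the negative gradient is no longer the plain residual, but the same update applies, which is why the method works for classification and ranking as well as regression.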

What Makes XGBoost Special

XGBoost stands for Extreme Gradient Boosting. It is an advanced and optimized version of Gradient Boosting designed for speed and performance. While the basic idea remains the same, XGBoost includes several enhancements that make it faster, more efficient, and less prone to overfitting.

One of its key strengths is regularization, which controls the complexity of the model. XGBoost adds penalty terms to its training objective for the number of leaves in each tree and for the size of the leaf weights. This discourages the model from fitting the training data too closely and improves its performance on unseen data. XGBoost also supports parallel processing: trees are still built one after another, but the search for the best split within each tree is spread across CPU cores, which typically makes training much faster than a traditional single-threaded Gradient Boosting implementation. For those looking to master such advanced techniques, enrolling in a Data Science Course in Ahmedabad can provide hands-on experience with tools like XGBoost.
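The effect of regularization can be seen in a small sketch of the leaf-weight and split-gain formulas from the XGBoost documentation's introduction to boosted trees. This is a simplified illustration: it covers only the L2 penalty (`reg_lambda`) and the per-leaf penalty (`gamma`), and the function names are invented for this example rather than taken from the library. Here `grad_sum` and `hess_sum` are the sums of first and second derivatives of the loss over the rows in a leaf; for squared error each gradient is `prediction - target` and each second derivative is 1.

```python
def leaf_weight(grad_sum, hess_sum, reg_lambda):
    """Optimal leaf value; a larger reg_lambda shrinks it toward zero."""
    return -grad_sum / (hess_sum + reg_lambda)


def leaf_score(grad_sum, hess_sum, reg_lambda):
    """How much a leaf with these statistics can reduce the objective."""
    return grad_sum ** 2 / (hess_sum + reg_lambda)


def split_gain(g_left, h_left, g_right, h_right, reg_lambda, gamma):
    """Objective reduction from splitting a node; gamma is the cost of an extra leaf."""
    parent = leaf_score(g_left + g_right, h_left + h_right, reg_lambda)
    children = (leaf_score(g_left, h_left, reg_lambda)
                + leaf_score(g_right, h_right, reg_lambda))
    return 0.5 * (children - parent) - gamma


# Four rows, each under-predicted by 2, so each gradient is -2 and each hessian is 1.
print(leaf_weight(-8.0, 4.0, reg_lambda=0.0))   # 2.0: the plain average residual
print(leaf_weight(-8.0, 4.0, reg_lambda=4.0))   # 1.0: shrunk by the L2 penalty

# A candidate split with two rows on each side.
print(split_gain(-4.0, 2.0, 4.0, 2.0, reg_lambda=0.0, gamma=0.0))   # 8.0
print(split_gain(-4.0, 2.0, 4.0, 2.0, reg_lambda=2.0, gamma=0.0))   # 4.0
print(split_gain(-4.0, 2.0, 4.0, 2.0, reg_lambda=2.0, gamma=5.0))   # -1.0: not worth splitting
```

A split with negative gain makes the regularized objective worse, so raising `gamma` or `reg_lambda` leads to smaller, more conservative trees.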

Why XGBoost is So Popular

XGBoost has become one of the most widely used algorithms in data science competitions and real-world applications, especially on tabular data. It offers high accuracy, works well with both small and large datasets, and handles missing values without manual imputation by learning, at each split, which branch rows with missing values should follow. Companies use it for tasks like predicting customer churn, detecting fraud, and forecasting sales.
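One way to picture the missing-value handling is to try sending the rows with missing values down each branch of a split and keep whichever direction gives the larger gain. The sketch below is a simplified illustration of that idea, not XGBoost's actual code: the function name, the use of `None` for a missing value, and the toy numbers are all assumptions made for this example.

```python
def leaf_score(grad_sum, hess_sum, reg_lambda):
    return grad_sum ** 2 / (hess_sum + reg_lambda)


def choose_default_direction(values, grads, hessians, threshold, reg_lambda=1.0):
    """Pick the branch for rows whose feature value is missing (None)."""
    g_left = h_left = g_right = h_right = g_miss = h_miss = 0.0
    for value, g, h in zip(values, grads, hessians):
        if value is None:
            g_miss += g
            h_miss += h
        elif value < threshold:
            g_left += g
            h_left += h
        else:
            g_right += g
            h_right += h

    parent = leaf_score(g_left + g_right + g_miss,
                        h_left + h_right + h_miss, reg_lambda)
    # Gain if the missing rows join the left branch.
    gain_left = 0.5 * (leaf_score(g_left + g_miss, h_left + h_miss, reg_lambda)
                       + leaf_score(g_right, h_right, reg_lambda) - parent)
    # Gain if the missing rows join the right branch.
    gain_right = 0.5 * (leaf_score(g_left, h_left, reg_lambda)
                        + leaf_score(g_right + g_miss, h_right + h_miss, reg_lambda)
                        - parent)
    if gain_left >= gain_right:
        return "left", gain_left
    return "right", gain_right


# Toy data: the two rows with a missing value have gradients like the large-value rows.
values = [1.0, 2.0, None, 8.0, 9.0, None]
grads = [-1.0, -1.0, 1.0, 1.0, 1.0, 1.0]
hessians = [1.0] * 6

direction, gain = choose_default_direction(values, grads, hessians, threshold=5.0)
print(direction)
```

Because the direction is chosen from the training data, the pattern of missingness itself can carry signal.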

Its popularity also comes from its versatility. XGBoost can handle classification, regression, and ranking problems, making it a go-to choice for many data scientists and machine learning engineers.
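In practice, switching between these problem types is mostly a matter of choosing a different objective. The fragment below is a small configuration sketch: the objective strings and parameter keys (`objective`, `eta`, `max_depth`, `lambda`, `gamma`) are XGBoost's own, while the task labels and the `make_params` helper are hypothetical names introduced for this example.

```python
# Task labels on the left are made up for this sketch; the values are XGBoost objectives.
OBJECTIVE_BY_TASK = {
    "regression": "reg:squarederror",
    "binary_classification": "binary:logistic",
    "multiclass_classification": "multi:softprob",  # also needs "num_class" set
    "ranking": "rank:pairwise",
}


def make_params(task, learning_rate=0.1, max_depth=6, reg_lambda=1.0, gamma=0.0):
    """Build a parameter dictionary in the form XGBoost's native API expects."""
    return {
        "objective": OBJECTIVE_BY_TASK[task],
        "eta": learning_rate,      # step size applied to each new tree
        "max_depth": max_depth,    # maximum depth of each tree
        "lambda": reg_lambda,      # L2 penalty on leaf weights
        "gamma": gamma,            # minimum gain required to make a split
    }


params = make_params("binary_classification", learning_rate=0.05)
print(params)
```

With the library installed, a dictionary like this would typically be passed to `xgboost.train(params, dtrain, num_boost_round=...)`, where `dtrain` is an `xgboost.DMatrix` built from your data.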

Gradient Boosting and XGBoost are powerful techniques that transform weak learners into strong predictive models. Gradient Boosting builds accuracy step by step, while XGBoost enhances this process with regularization and computational efficiency. For those seeking to learn these methods in depth, a Data Science Course in Gurgaon offers comprehensive training.

If you are learning data science or working on real-world prediction problems, understanding these algorithms is essential. They not only deliver great results but also give you insight into how modern machine learning models learn and improve over time.
